High-Availability Database Cluster Management Tool for OpenResty Edge

Introduction

OpenResty Edge DB Cluster Management Tool is a command-line utility for managing high-availability database clusters. It helps users quickly set up and manage a database cluster made up of a monitor node and data nodes.

Architecture

The cluster consists of:

- One monitor node: Manages the cluster state and coordinates failover

- Two or more data nodes: One primary and one or more standby nodes

System Requirements

- Operating System: Linux (with systemd support)

- Memory: 2 GB minimum

- Disk Space: 5 GB minimum; size it according to your actual database storage requirements. Recommended configuration:

  - Monitor nodes: 50 GB

  - Data nodes for Admin DB: 200 GB or more

  - Data nodes for Log Server DB: 500 GB or more

- Software: OpenResty PostgreSQL 12 or higher

- Firewall Ports: 5432 (PostgreSQL)

- Network: All nodes must be able to communicate with each other
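The requirements above can be checked on each machine before installation with a small Bash preflight script. This is a hypothetical sketch, not part of the management tool. The function names are made up. The script reads /proc/meminfo and relies on GNU df. It treats free space on one filesystem as a stand-in for disk size. It also allows about 10% slack on memory, because MemTotal excludes memory reserved by the kernel; that slack is an assumption.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check for the System Requirements (not part of the tool).

# 2 GB minimum in KiB (as reported by /proc/meminfo), minus ~10% slack because
# MemTotal excludes kernel-reserved memory (assumption).
MEM_MIN_KB=$((2 * 1024 * 1024 * 9 / 10))

# meets_minimum ACTUAL MINIMUM -> exit status 0 if ACTUAL >= MINIMUM
meets_minimum() {
    [ "$1" -ge "$2" ]
}

# Total memory in KiB (Linux only).
total_mem_kb() {
    awk '/^MemTotal:/ {print $2}' /proc/meminfo
}

# Available space in whole GB on the filesystem holding PATH (GNU df).
free_disk_gb() {
    df -P -BG "${1:-/}" | awk 'NR == 2 {sub(/G$/, "", $4); print $4}'
}

# preflight [MIN_DISK_GB] [PATH] -> prints each failed check, returns 1 on any failure
preflight() {
    local min_disk_gb=${1:-5} path=${2:-/} rc=0
    if ! command -v systemctl >/dev/null 2>&1; then
        echo "FAIL: systemd (systemctl) not found"
        rc=1
    fi
    if ! meets_minimum "$(total_mem_kb)" "$MEM_MIN_KB"; then
        echo "FAIL: less than 2 GB of memory"
        rc=1
    fi
    if ! meets_minimum "$(free_disk_gb "$path")" "$min_disk_gb"; then
        echo "FAIL: less than ${min_disk_gb} GB available on ${path}"
        rc=1
    fi
    [ "$rc" -eq 0 ] && echo "OK: basic requirements met"
    return "$rc"
}
```

After sourcing the script, run preflight 50 on the monitor node, preflight 200 on an Admin DB data node, or preflight 500 on a Log Server DB data node. Pass a second argument to check the filesystem that will actually hold the database. This document does not state the data directory, so choosing that path is up to you.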

Features

- Install, start, and stop monitor and data nodes

- Query database cluster status

- Manage PostgreSQL HBA (Host-Based Authentication) configuration

- Support cluster switchover operations

- Automatic failover capability

Important Notes

- Test in a non-production environment first

- Always back up important data before installation; you can refer to this document for backup instructions

- Perform operations during maintenance windows

- When switching monitor nodes, perform the switchover on the primary data node first (if it is still alive), then on the secondary data nodes

- Use the -y option with caution: it skips all confirmations and may lead to data inconsistency, corruption, or loss.

Installation Steps

Download the management tool:

curl -O https://openresty.com/client/oredge/openresty-edge-db-cluster-manager.sh

Install monitor node:

Note: The monitor node must be installed on a brand new machine. Running the installation on a machine that already contains a database will result in data loss.

First, use the installer openresty-edge-install.sh to install the OpenResty Edge Admin Database or the OpenResty Edge Log Server Database, which initializes the environment.

sudo bash openresty-edge-db-cluster-manager.sh -a install -t monitor -n MONITOR_IP

Install one or more data nodes (the first node to be installed successfully becomes the primary node):

Note: When installing the primary node, you can continue using an existing database instance or perform a fresh installation on a new machine. Do NOT perform a fresh installation on an existing database instance with data, as this will result in data loss.

If you want to perform a fresh installation, please refer to either of these two documents for database initialization: OpenResty Edge Admin Database or OpenResty Edge Log Server Database.

Execute the following command to set up the database as a data node for the database cluster:

sudo bash openresty-edge-db-cluster-manager.sh -a install -t data -m MONITOR_IP -n NODE_IP

Check the cluster state:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "cluster state" -m MONITOR_IP
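The order above can be captured in a small Bash helper that prints the command for each host: the monitor first, then the data nodes, with the first data node installed successfully becoming primary. This is a hypothetical sketch. print_install_plan and TOOL are illustrative names, and the helper executes nothing. It also assumes each host has already been prepared as described above.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the tool): prints the install commands in the
# documented order. Nothing is executed; run each command on the host named above it.
TOOL='sudo bash openresty-edge-db-cluster-manager.sh'

# print_install_plan MONITOR_IP DATA_IP...
print_install_plan() {
    local monitor_ip=$1 ip n=1
    shift
    if [ $# -lt 2 ]; then
        echo "warning: the cluster expects two or more data nodes" >&2
    fi
    echo "# $n. on $monitor_ip (monitor)"
    echo "$TOOL -a install -t monitor -n $monitor_ip"
    for ip in "$@"; do
        n=$((n + 1))
        if [ "$n" -eq 2 ]; then
            # The first data node installed successfully becomes the primary.
            echo "# $n. on $ip (data, expected to become primary)"
        else
            echo "# $n. on $ip (data, standby)"
        fi
        echo "$TOOL -a install -t data -m $monitor_ip -n $ip"
    done
    echo "# finally, check the cluster state"
    echo "$TOOL -a get -c \"cluster state\" -m $monitor_ip"
}
```

For a monitor at 192.168.1.10 and data nodes at 192.168.1.11 and 192.168.1.12, print_install_plan 192.168.1.10 192.168.1.11 192.168.1.12 prints four commands. Each one is preceded by a comment naming the host it should run on.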

Command Usage

Basic Syntax

sudo bash openresty-edge-db-cluster-manager.sh [options]

Command Options

-a, --action Action (install/start/stop/get/set/switchover/uninstall)

-t, --type Node type (monitor/data)

-m, --monitor-ip Monitor node IP address

-n, --node-ip Current node IP address

-v, --pg-version PostgreSQL version (default: 12)

-y, --yes Skip all confirmations and use default values (Warning: this auto-confirms destructive operations)

-c, --config Configuration type (get: cluster state/node type/service status, set: pghba)

-i, --pg-hba-ips PostgreSQL HBA IPs (comma-separated)

-s, --switch-type Switchover type (switch to new monitor)

-h, --help Show help message
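Because -a and -t accept only the values listed above, a thin wrapper can reject typos before anything runs. The sketch below is hypothetical: run_tool, in_list, and DRY_RUN are not part of the tool. By default it only prints the shell-quoted command, and setting DRY_RUN=0 runs it with sudo.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper (not part of the tool): validates -a and -t against the
# values in the option list, then prints (default) or runs the command.
TOOL=(sudo bash openresty-edge-db-cluster-manager.sh)
VALID_ACTIONS=(install start stop get set switchover uninstall)
VALID_TYPES=(monitor data)

# in_list WORD ITEM... -> status 0 if WORD equals one of the ITEMs
in_list() {
    local word=$1 item
    shift
    for item in "$@"; do
        [ "$item" = "$word" ] && return 0
    done
    return 1
}

# run_tool ACTION [OPTION...]; set DRY_RUN=0 to actually execute
run_tool() {
    local action=${1:-} prev="" arg
    shift || true
    if ! in_list "$action" "${VALID_ACTIONS[@]}"; then
        echo "unknown action: '$action'" >&2
        return 2
    fi
    # Check the value that follows -t/--type, if present.
    for arg in "$@"; do
        if [ "$prev" = "-t" ] || [ "$prev" = "--type" ]; then
            if ! in_list "$arg" "${VALID_TYPES[@]}"; then
                echo "unknown node type: '$arg'" >&2
                return 2
            fi
        fi
        prev=$arg
    done
    local cmd=("${TOOL[@]}" -a "$action" "$@")
    if [ "${DRY_RUN:-1}" = 1 ]; then
        # Print the command shell-quoted, so it can be copied and pasted as-is.
        printf '%q' "${cmd[0]}"
        printf ' %q' "${cmd[@]:1}"
        echo
    else
        "${cmd[@]}"
    fi
}
```

For example, run_tool get -c "node type" prints the command, and DRY_RUN=0 run_tool get -c "node type" runs it. The wrapper does not check -c, -s, or any other option. The tool itself remains the authority on what it accepts.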

Common Usage Examples

Run without any options to perform all operations interactively:

sudo bash openresty-edge-db-cluster-manager.sh

Install monitor node:

sudo bash openresty-edge-db-cluster-manager.sh -a install -t monitor -n 192.168.1.10

Install data node:

sudo bash openresty-edge-db-cluster-manager.sh -a install -t data -m 192.168.1.10 -n 192.168.1.11

Check cluster status:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "cluster state" -m 192.168.1.10

Get node type:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "node type"

Configure PostgreSQL HBA:

sudo bash openresty-edge-db-cluster-manager.sh -a set -c pghba -t data -i "192.168.1.10,192.168.1.11"

Switch to new monitor:

sudo bash openresty-edge-db-cluster-manager.sh -a switchover -s "switch to new monitor" -m 192.168.1.12

Uninstall node:

sudo bash openresty-edge-db-cluster-manager.sh -a uninstall -t monitor
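The -i value is a single comma-separated string. Building it from a list of addresses helps avoid stray spaces or typos. The sketch below is minimal and the function names are hypothetical. Its IPv4-only check is an assumption of this example, not a documented limit of the tool.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of the tool) for building the -i argument.

# is_ipv4 ADDR -> status 0 for a dotted-quad IPv4 address with octets 0-255
is_ipv4() {
    local addr=$1 octet
    [[ $addr =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]] || return 1
    local IFS=.
    for octet in $addr; do
        # 10# forces base 10 so octets such as "08" are not read as octal.
        (( 10#$octet <= 255 )) || return 1
    done
    return 0
}

# join_by_comma ITEM... -> "item1,item2,..."
join_by_comma() {
    local IFS=,
    printf '%s\n' "$*"
}

# hba_command IP... -> prints the "-a set -c pghba" command for the given IPs
hba_command() {
    local ip
    [ $# -gt 0 ] || { echo "no IPs given" >&2; return 1; }
    for ip in "$@"; do
        is_ipv4 "$ip" || { echo "invalid IPv4 address: $ip" >&2; return 1; }
    done
    printf 'sudo bash openresty-edge-db-cluster-manager.sh -a set -c pghba -t data -i "%s"\n' \
        "$(join_by_comma "$@")"
}
```

Running hba_command 192.168.1.10 192.168.1.11 prints the same command as the HBA example above. If any address fails the check, it prints nothing.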

Monitor Node Switchover Scenarios

This section describes detailed steps for monitor node switchover in different scenarios. The initial cluster consists of: 1 monitor node, 1 primary data node, and 1 secondary data node.

Important Notes:

- Ensure the new monitor node is properly installed and running before performing switchover

- Database service may be briefly affected during switchover

- Verify cluster state after each switchover

- Perform switchover during maintenance windows

- Backup important data before operation

- Keep detailed operation logs for troubleshooting

- When the monitor node is functioning normally, switchover is usually unnecessary. If you wish to perform a switchover, please stop the monitor node first.

You can use the following command on data nodes to get the node type:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "node type"

- monitor: Monitor node

- primary: Primary data node

- secondary: Standby data node

Scenario 1: Recoverable Monitor Node

When the monitor node fails temporarily but can be recovered, no monitor node switchover is needed:

Fix the monitor node issue

Restart the monitor node service:

sudo bash openresty-edge-db-cluster-manager.sh -a start -t monitor

Verify cluster state:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "cluster state" -m MONITOR_IP

Scenario 2: Unrecoverable Monitor Node with All Data Nodes Normal

When the monitor node cannot be recovered, but all data nodes are running normally, migrate all data nodes to a new monitor node.

Install a new monitor node on a new server:

sudo bash openresty-edge-db-cluster-manager.sh -a install -t monitor -n NEW_MONITOR_IP

Switch the primary data node to the new monitor node (run on the primary data node):

sudo bash openresty-edge-db-cluster-manager.sh -a switchover -s "switch to new monitor" -m NEW_MONITOR_IP

Perform the same switchover operation on the secondary data node:

sudo bash openresty-edge-db-cluster-manager.sh -a switchover -s "switch to new monitor" -m NEW_MONITOR_IP

Verify cluster state:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "cluster state" -m NEW_MONITOR_IP
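The monitor switchover scenarios differ mainly in which data nodes are still alive, and the notes above require the primary to be switched before any secondary. The hypothetical helper below prints the commands in that order from role:IP pairs you supply, for example from running the node-type query on each node. It executes nothing and ignores roles other than primary and secondary.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the tool): prints the monitor switchover
# commands with the primary first, then secondaries. Nothing is executed.
TOOL='sudo bash openresty-edge-db-cluster-manager.sh'

# print_switchover_plan NEW_MONITOR_IP ROLE:IP...
#   ROLE is "primary" or "secondary"; other roles are ignored.
print_switchover_plan() {
    local new_monitor=$1 role entry n=1
    shift
    echo "# step $n: on $new_monitor (new monitor, new server)"
    echo "$TOOL -a install -t monitor -n $new_monitor"
    # The outer loop fixes the order: every primary before any secondary.
    for role in primary secondary; do
        for entry in "$@"; do
            if [ "${entry%%:*}" = "$role" ]; then
                n=$((n + 1))
                echo "# step $n: on ${entry#*:} ($role data node)"
                echo "$TOOL -a switchover -s \"switch to new monitor\" -m $new_monitor"
            fi
        done
    done
    echo "# finally, verify the cluster state"
    echo "$TOOL -a get -c \"cluster state\" -m $new_monitor"
}
```

For Scenario 2, call print_switchover_plan NEW_MONITOR_IP primary:PRIMARY_IP secondary:SECONDARY_IP. For Scenarios 3 and 4, pass only the surviving data node. In Scenario 4, answer the promotion prompt as described below.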

Scenario 3: Monitor and Secondary Node Failure, Primary Node Normal

When both the monitor node and the secondary data node fail, but the primary data node is running normally:

Install a new monitor node on a new server:

sudo bash openresty-edge-db-cluster-manager.sh -a install -t monitor -n NEW_MONITOR_IP

Switch the primary data node to the new monitor node (run on the primary data node):

sudo bash openresty-edge-db-cluster-manager.sh -a switchover -s "switch to new monitor" -m NEW_MONITOR_IP

Verify cluster state:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "cluster state" -m NEW_MONITOR_IP

Fix or reinstall the secondary node (optional).

Scenario 4: Monitor and Primary Node Failure, Secondary Node Normal

When both the monitor node and the primary data node fail, but the secondary data node is running normally:

Install a new monitor node on a new server:

sudo bash openresty-edge-db-cluster-manager.sh -a install -t monitor -n NEW_MONITOR_IP

Switch the secondary data node to the new monitor node (run on the secondary data node):

sudo bash openresty-edge-db-cluster-manager.sh -a switchover -s "switch to new monitor" -m NEW_MONITOR_IP

When prompted “Will promote current node to primary. Continue?”, enter “y” to promote this node to primary.

Verify cluster state:

sudo bash openresty-edge-db-cluster-manager.sh -a get -c "cluster state" -m NEW_MONITOR_IP

Fix or reinstall the original primary node as a secondary node (optional).

Logs and History

- Each operation creates a separate history directory: oredge-db-cluster-histories/<timestamp>

- All operation logs are saved in oredge-db-cluster-histories/<timestamp>/script.log
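To find the log of the most recent operation, compare the modification times of the script.log files. This sketch assumes that oredge-db-cluster-histories is relative to the directory the tool was run from, because this document does not say where it is created. latest_log is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the tool): prints the most recently
# modified script.log under the history root (default: ./oredge-db-cluster-histories).
latest_log() {
    local root=${1:-oredge-db-cluster-histories} log newest=""
    for log in "$root"/*/script.log; do
        # Skip the literal pattern left behind when nothing matches.
        [ -f "$log" ] || continue
        if [ -z "$newest" ] || [ "$log" -nt "$newest" ]; then
            newest=$log
        fi
    done
    [ -n "$newest" ] || return 1
    printf '%s\n' "$newest"
}
```

For example, tail -n 50 "$(latest_log)" shows the end of the latest log. Pass a different root as the first argument if the histories live elsewhere.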

Error Handling

If you encounter errors:

- Check the log file for detailed information

- Verify network connectivity between nodes

- Confirm that system requirements are met

- Ensure there are no port conflicts

- Verify database service installation
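Network connectivity to the PostgreSQL port can be checked from each node with Bash's built-in /dev/tcp redirection. The function names in this sketch are hypothetical. It only confirms that a TCP connection to port 5432 succeeds; it does not confirm that PostgreSQL authentication or HBA rules allow access. It also requires a Bash build with network redirection support, plus coreutils timeout.

```bash
#!/usr/bin/env bash
# Hypothetical connectivity check (not part of the tool).

# port_open HOST [PORT] [TIMEOUT_SECONDS] -> status 0 if a TCP connection succeeds
port_open() {
    local host=$1 port=${2:-5432} secs=${3:-3}
    # Open file descriptor 3 to /dev/tcp/HOST/PORT in a child bash, bounded by timeout.
    timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# check_nodes IP... -> one OK/FAIL line per node; returns 1 if any node fails.
# The port defaults to 5432 and can be overridden with PG_PORT.
check_nodes() {
    local port=${PG_PORT:-5432} ip rc=0
    for ip in "$@"; do
        if port_open "$ip" "$port"; then
            echo "OK   $ip:$port"
        else
            echo "FAIL $ip:$port"
            rc=1
        fi
    done
    return "$rc"
}
```

On each node, run check_nodes with the other nodes' IPs, for example check_nodes 192.168.1.10 192.168.1.11. A FAIL line points to a firewall, routing, or service problem on that host.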

FAQ

What happens during a monitor node failure?

- The data nodes continue to operate

- You can switch to a new monitor node using the switchover command

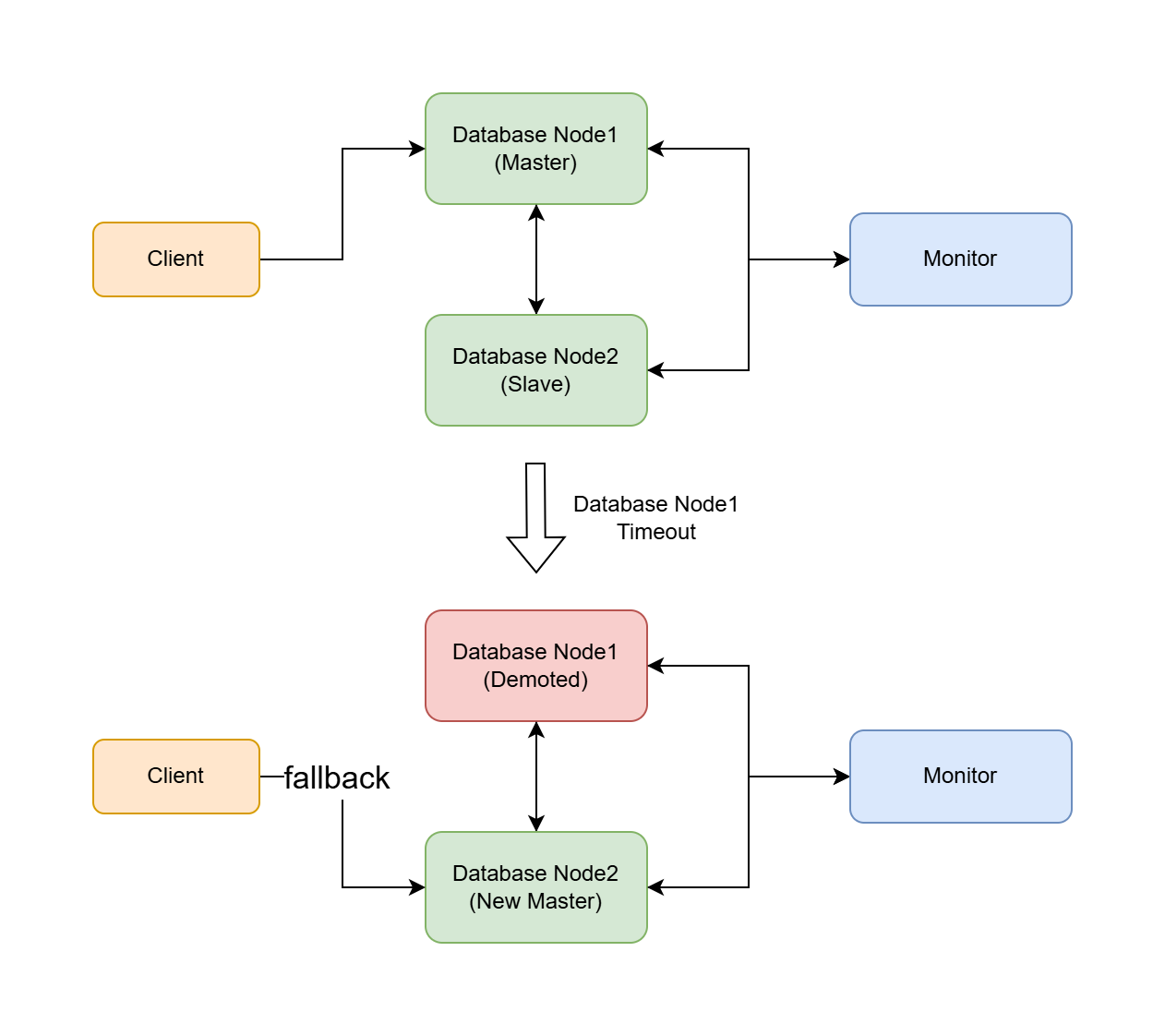

How is automatic failover triggered?

- The monitor node continuously checks data node health

- If the primary node fails, a standby is automatically promoted

Can I add more nodes to an existing cluster?

- Yes, use the install command with the data node type (-t data)

- Existing data on the new node will be cleared and automatically synchronized with the primary node

What should I do if a node fails to start?

- Follow the Error Handling section

- Contact us at support@openresty.com for assistance